GPU vs CPU Inference: Real Truths

What most people never tell you

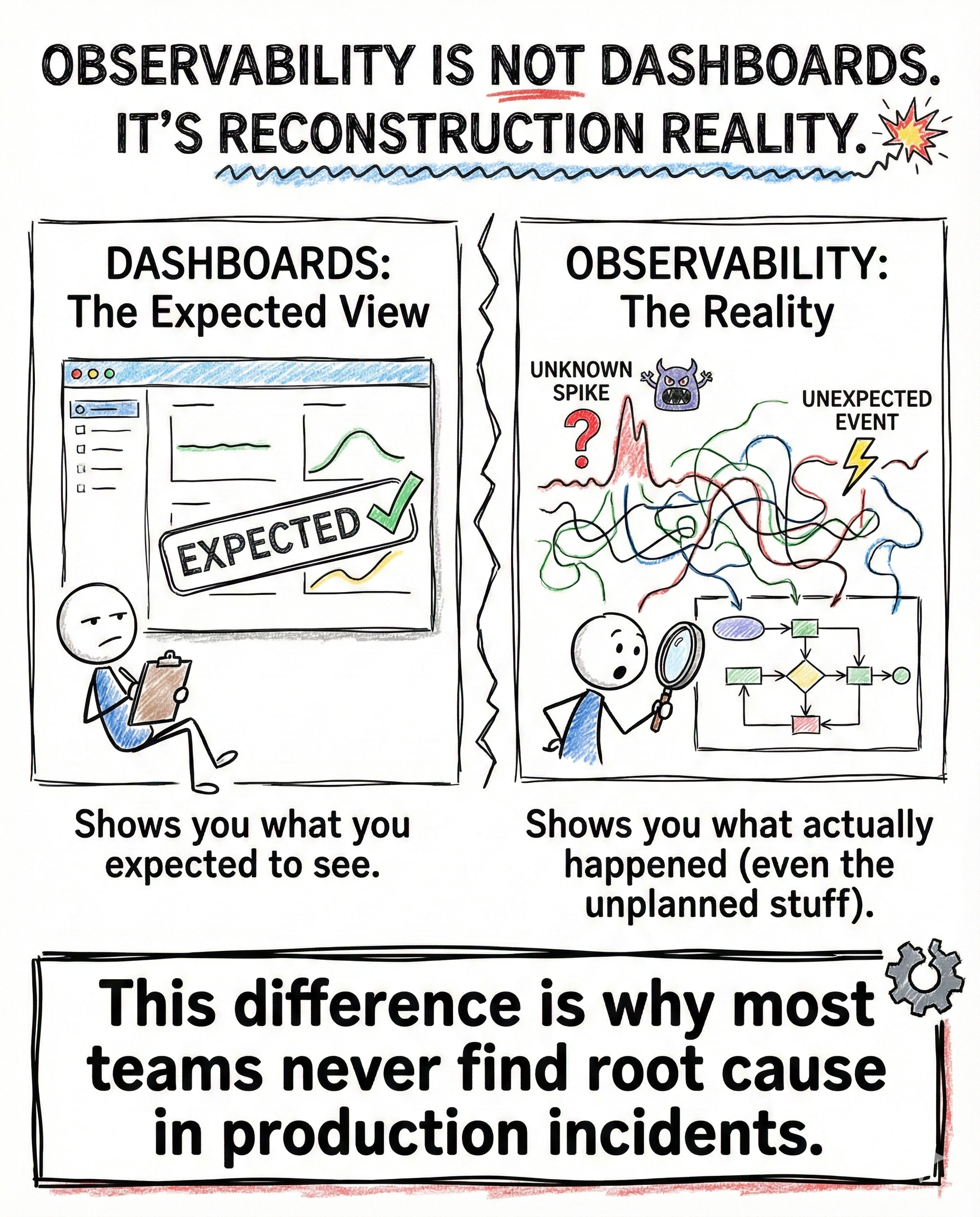

This debate is usually framed the wrong way.

It is not about raw power.

It is about architecture, memory access, and parallelism.

Once you understand that, the tradeoffs become obvious.

Why this decision matters

Choosing between CPU and GPU directly impacts:

- cost

- throughput

- latency

- concurrency

- energy usage

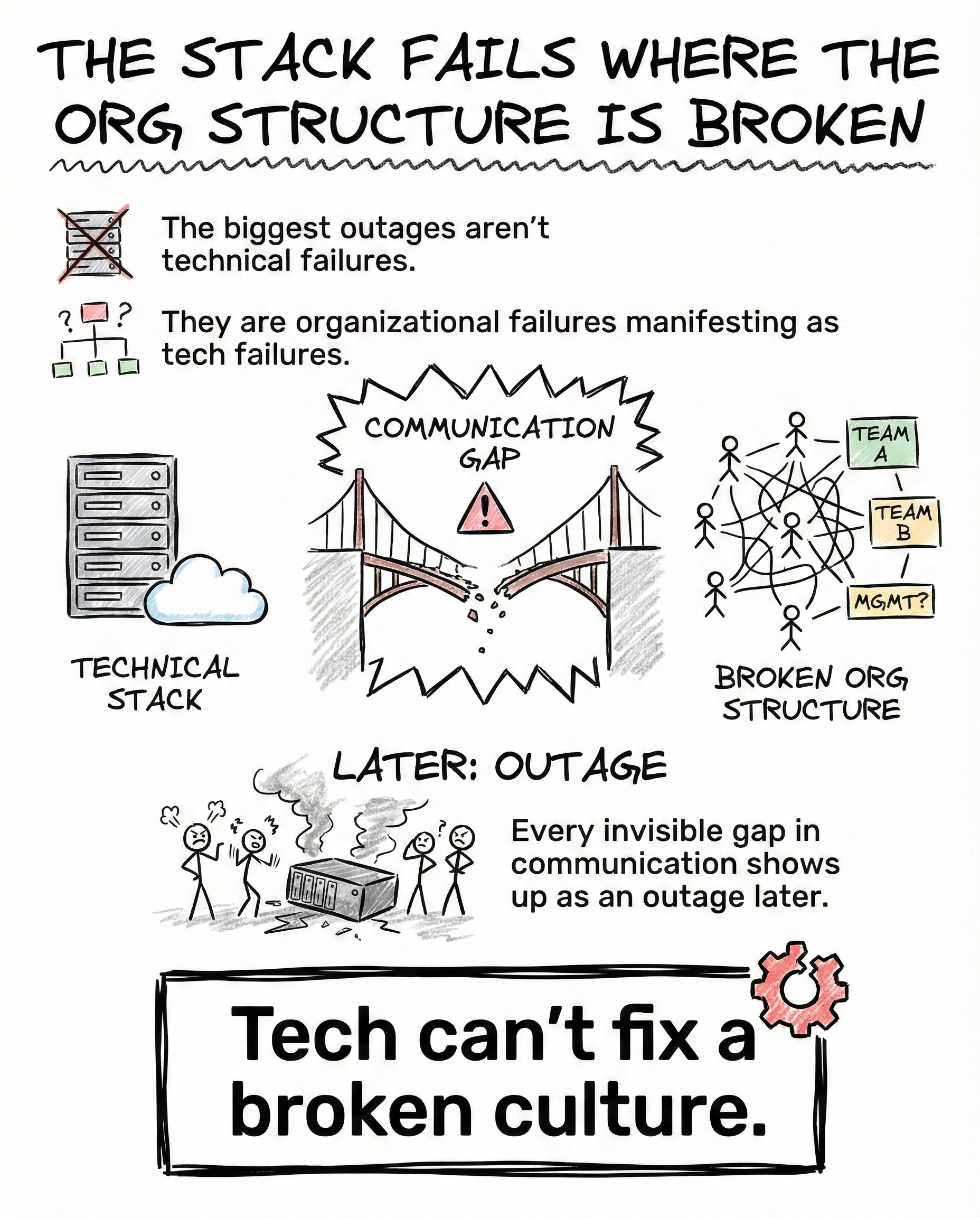

A lot of teams overspend on GPUs not because they need them, but because they never learned how inference actually works.

How GPUs and CPUs are fundamentally different

GPUs

GPUs are built for one thing: parallel math.

- massively parallel execution

- optimized for matrix multiplication

- extremely high VRAM bandwidth

- excellent for transformer workloads

This makes GPUs ideal for large language models where attention and linear algebra dominate compute.
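To see why memory bandwidth matters so much, here is a rough back-of-envelope sketch. It assumes single-stream decoding of a dense model, where every weight is read from memory once per generated token, so bandwidth divided by weight size gives a ceiling on tokens per second. The function name and the hardware numbers are illustrative assumptions, not measurements.

```python
def max_decode_tokens_per_second(weight_gb: float, bandwidth_gb_s: float) -> float:
    """Ceiling on single-stream decode speed for a memory-bound dense model.

    Assumes all weights are streamed from memory once per token and ignores
    compute time, KV-cache reads, and batching.
    """
    if weight_gb <= 0 or bandwidth_gb_s <= 0:
        raise ValueError("weight_gb and bandwidth_gb_s must be positive")
    return bandwidth_gb_s / weight_gb


# A 7B model stored at 16 bits per weight is about 14 GB of weights.
weights_gb = 14.0

# Hypothetical bandwidth figures, chosen only to show the order of magnitude.
gpu_like = max_decode_tokens_per_second(weights_gb, bandwidth_gb_s=1000.0)  # roughly 71 tokens/s
cpu_like = max_decode_tokens_per_second(weights_gb, bandwidth_gb_s=50.0)    # roughly 3.6 tokens/s

print(f"GPU-like ceiling: {gpu_like:.1f} tokens/s")
print(f"CPU-like ceiling: {cpu_like:.1f} tokens/s")
```

Real throughput lands below this ceiling, but the ratio between the two numbers is the part worth remembering.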

CPUs

CPUs trade parallelism for flexibility.

- handle quantized models efficiently

- significantly lower cost

- better at branching and control flow

- easy to deploy locally

For many workloads, especially during development, CPUs are more than enough.
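Quantization is what makes this practical, and the arithmetic is simple enough to sketch. The snippet below estimates weight size from parameter count and bits per weight, then checks it against available RAM. The 20% headroom for KV cache and runtime overhead is my own assumption, as are the function names.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits_in_ram(params_billion: float, bits_per_weight: float,
                ram_gb: float, headroom: float = 0.2) -> bool:
    """True if the weights plus an assumed headroom fraction fit in RAM."""
    needed_gb = weight_footprint_gb(params_billion, bits_per_weight) * (1 + headroom)
    return needed_gb <= ram_gb


# A 13B model on a machine with 16 GB of RAM:
print(weight_footprint_gb(13, 16))  # 26.0 GB at 16-bit
print(weight_footprint_gb(13, 4))   # 6.5 GB at 4-bit
print(fits_in_ram(13, 16, ram_gb=16))  # False
print(fits_in_ram(13, 4, ram_gb=16))   # True
```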

What actually works in practice

Hard-earned truths from real deployments:

- GPUs pull decisively ahead of CPUs once models exceed ~20B parameters

- CPUs handle 2B to 13B models well when quantized

- GPUs shine when you need high concurrency

- CPUs win in cost-sensitive and offline setups

This is exactly why local LLMs thrive on CPUs.

They are not underpowered.

They are correctly matched to the workload.
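Those rules of thumb can be written down as a small heuristic. This is only a sketch: the thresholds come from the list above, but the order in which the rules are checked and the "benchmark both" fallback are my assumptions, and the function name is hypothetical.

```python
def suggest_hardware(params_billion: float, quantized: bool,
                     high_concurrency: bool, cost_sensitive_or_offline: bool) -> str:
    """Map the rules of thumb onto a first-guess hardware choice."""
    # Large models: GPU territory regardless of other constraints (assumed priority).
    if params_billion > 20:
        return "gpu"
    # Many simultaneous requests benefit from GPU parallelism.
    if high_concurrency:
        return "gpu"
    # Small-to-mid quantized models run well on CPU.
    if 2 <= params_billion <= 13 and quantized:
        return "cpu"
    # Budget-constrained or offline setups lean CPU.
    if cost_sensitive_or_offline:
        return "cpu"
    # Nothing decisive: measure instead of guessing.
    return "benchmark both"


print(suggest_hardware(70, quantized=True, high_concurrency=False, cost_sensitive_or_offline=True))
print(suggest_hardware(7, quantized=True, high_concurrency=False, cost_sensitive_or_offline=False))
print(suggest_hardware(7, quantized=False, high_concurrency=False, cost_sensitive_or_offline=False))
```

Treat the result as a starting point for a benchmark, not a verdict.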

Why people get this wrong

Many developers assume:

- GPU equals fast

- CPU equals slow

Reality is more nuanced.

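If your model is small and quantized, the fixed overhead of a GPU (moving data to the device, launching kernels, paying for an accelerator that sits idle) can outweigh the benefits.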

If your workload is bursty or offline, CPU inference is often the smarter choice.
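A quick cost sketch makes the bursty case concrete. It assumes an always-on instance billed by the hour, so the cost per token depends on how many tokens you actually need, not on how many the machine could produce. Every price and throughput figure here is hypothetical, and so is the function name.

```python
def cost_per_million_tokens(hourly_price_usd: float,
                            capacity_tokens_per_s: float,
                            demand_tokens_per_hour: float) -> float:
    """Cost per million tokens for one always-on instance at a given demand."""
    if demand_tokens_per_hour <= 0:
        raise ValueError("demand must be positive")
    capacity_per_hour = capacity_tokens_per_s * 3600
    if demand_tokens_per_hour > capacity_per_hour:
        raise ValueError("demand exceeds what a single instance can serve")
    # You pay for the whole hour no matter how little of it you use.
    return hourly_price_usd / demand_tokens_per_hour * 1_000_000


# Hypothetical instances: a GPU box at $2.00/h and 1000 tokens/s,
# a CPU box at $0.20/h and 20 tokens/s.
light_demand = 50_000  # tokens per hour, a bursty or background workload

gpu_cost = cost_per_million_tokens(2.00, 1000, light_demand)
cpu_cost = cost_per_million_tokens(0.20, 20, light_demand)
print(f"GPU: ${gpu_cost:.2f} per million tokens")
print(f"CPU: ${cpu_cost:.2f} per million tokens")

# At heavy demand the picture flips: one CPU box cannot keep up at all.
heavy_demand = 3_000_000
print(f"GPU at heavy demand: ${cost_per_million_tokens(2.00, 1000, heavy_demand):.2f}")
try:
    cost_per_million_tokens(0.20, 20, heavy_demand)
except ValueError as err:
    print(f"CPU at heavy demand: {err}")
```

Under these made-up numbers the GPU is ten times more expensive per token at light load and the only option at heavy load, which is the whole point: utilization decides, not the spec sheet.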

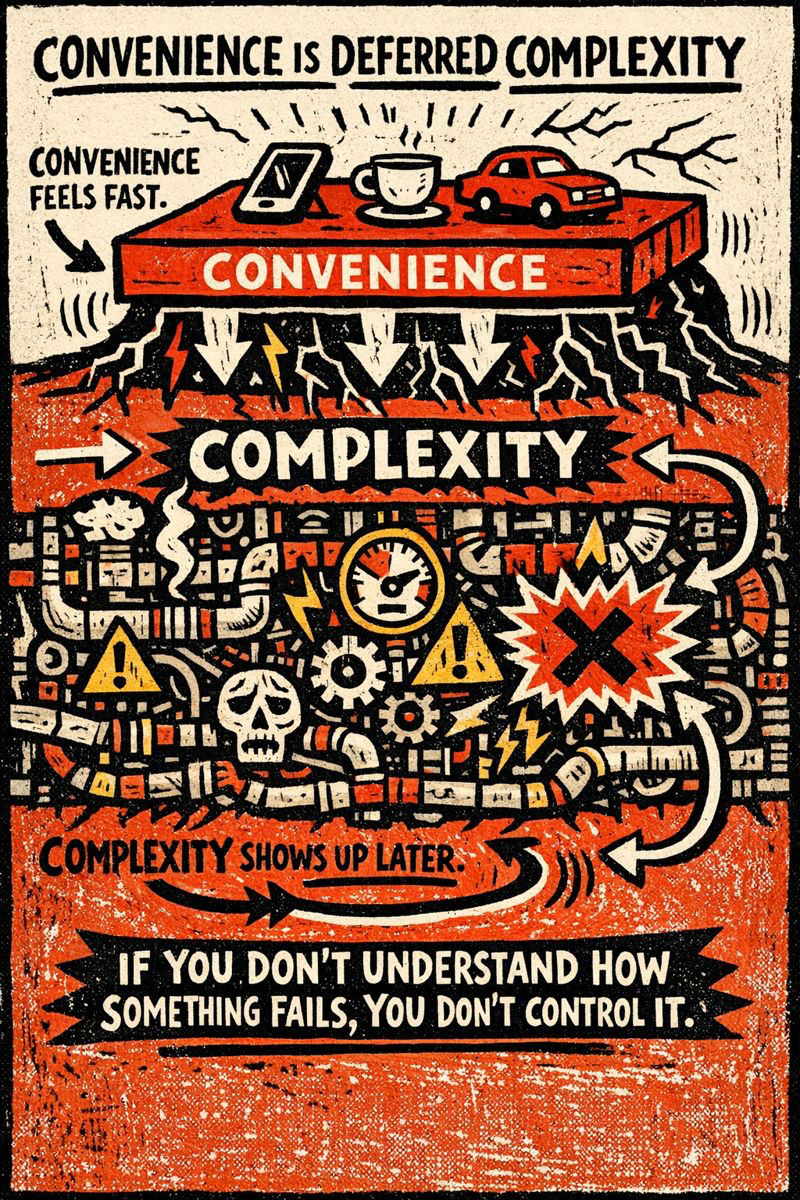

Hardware mismatch is an architectural failure, not a performance one.

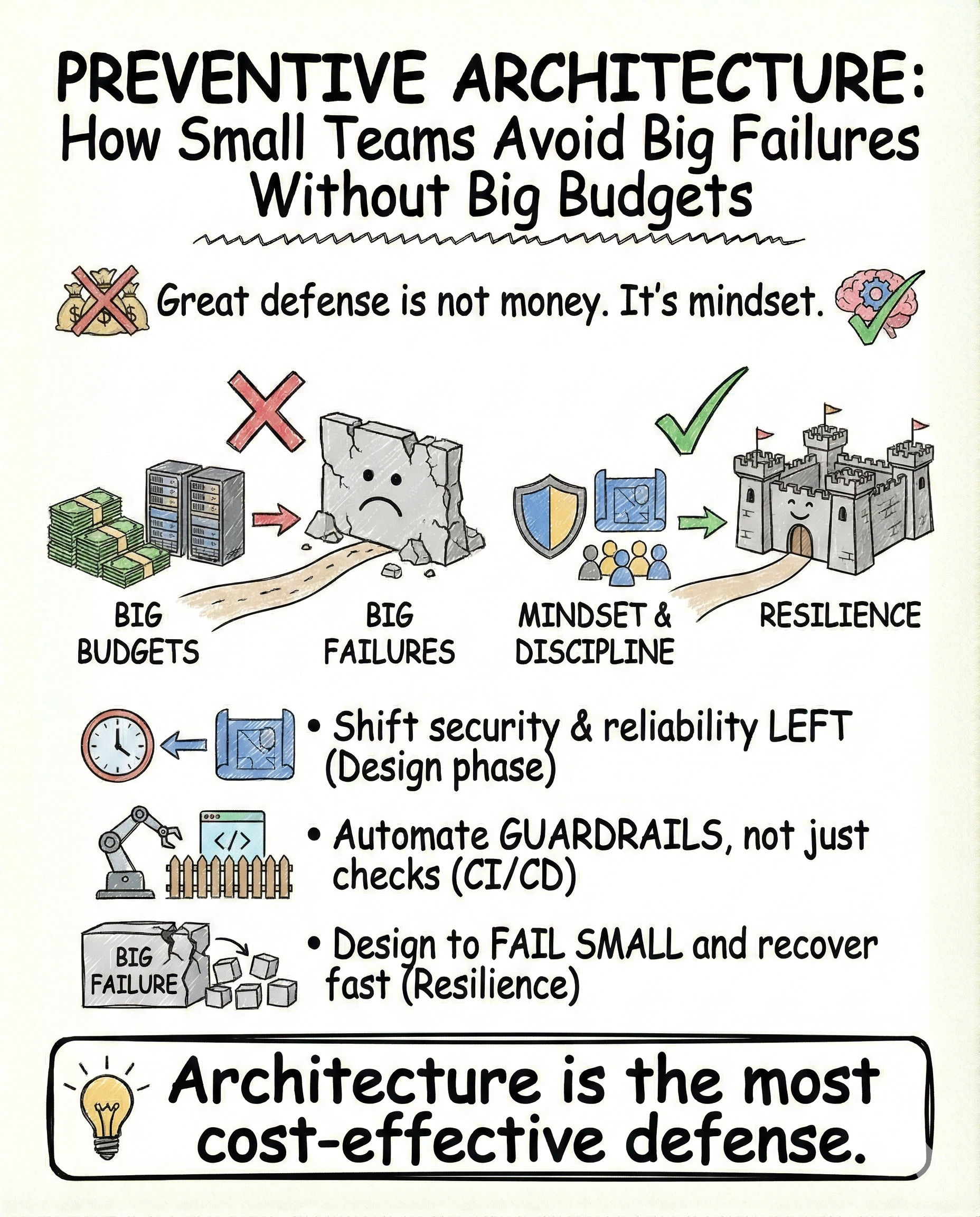

How I actually deploy systems

My approach helps keep systems lean:

- local CPU inference for development and experimentation

- GPU-backed cloud inference for production workloads

- hybrid setups for automation and background jobs

Once you understand the hardware, deployment stops feeling like guesswork.
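As a minimal sketch, that split can live in a small routing table. The backend names, workload labels, and the latency-based rule for hybrid jobs are all assumptions made for illustration; they are one possible reading of "hybrid", not a description of any specific setup.

```python
from enum import Enum


class Backend(Enum):
    LOCAL_CPU = "local-cpu"
    CLOUD_GPU = "cloud-gpu"


# Fixed routes taken from the list above.
ROUTES = {
    "development": Backend.LOCAL_CPU,
    "experimentation": Backend.LOCAL_CPU,
    "production": Backend.CLOUD_GPU,
}

# Workloads that can go either way (assumed rule: latency decides).
HYBRID_WORKLOADS = {"automation", "background"}


def pick_backend(workload: str, latency_sensitive: bool = False) -> Backend:
    """Choose where an inference job should run."""
    if workload in ROUTES:
        return ROUTES[workload]
    if workload in HYBRID_WORKLOADS:
        return Backend.CLOUD_GPU if latency_sensitive else Backend.LOCAL_CPU
    raise ValueError(f"unknown workload: {workload!r}")


print(pick_backend("development").value)
print(pick_backend("production").value)
print(pick_backend("background").value)
print(pick_backend("automation", latency_sensitive=True).value)
```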

The real takeaway

Inference performance is not about buying the biggest machine.

It is about choosing the right architecture for the job.

That is what separates experimentation from engineering.

Closing

This post is part of InsideTheStack, focused on practical AI infrastructure decisions that actually matter.

Follow along for more.

#InsideTheStack #LLMInfra #GPUvsCPU